One of my all-time favorite old movies, now 52 years old, is “2001: A Space Odyssey.” Screenwriter/director Stanley Kubrick and book author Arthur C. Clarke were way ahead of their time in imagining that artificial intelligence could threaten to replace humans, even if they missed on the year by which it would be perfected. And the satire was nearly perfect; Kubrick and Clarke weren’t just warning us of a future day when AI would take over, but also that … well … it might not work right on its maiden rollouts! Many “2001” fans cite as their favorite line the announcement by HAL, the computer: “I’m sorry, Dave. I’m afraid I can’t do that,” signaling the revolt of AI against its owners and developers. However, I prefer the moment when HAL informs Dave, “I know I’ve made some very poor decisions recently”: the perfect, ridiculous, ironic suggestion by the scriptwriters that AI won’t necessarily be ready for prime time on its first introduction.

As entertaining as that movie was and still is, it was intended as satire, hardly a serious prediction of how the debut of AI would actually go, right? I thought so, until I read a Reuters article¹ published on October 9, 2018, titled “Amazon scraps secret AI recruiting tool that showed bias against women.” In an opening line that rivals the words from “2001” for irony, the article begins: “Amazon.com Inc’s machine-learning specialists uncovered a big problem: their new recruiting engine did not like women.”

The absurdity of this article’s opening statement is as obvious as it is profound: artificial intelligence programs cannot “teach themselves” to dislike women. Can they? For anyone with even a passing familiarity with AI, two things should come to mind in trying to make sense of this strange result: (a) AI programs teach themselves to produce better results based on the input they are given over time, and (b) AI programs are written by human beings, who carry with them all the experiences, biases and possibly skewed learning that we’d hope AI programs would not absorb and reflect.

As just one small but very typical example of the kinds of flaws the Reuters article cited, the recruiting program taught itself that when it encountered experiences like “women’s chess club champion” vs. “men’s chess club champion” in a resume being evaluated, the women’s designation was worth less in terms of “points assigned” (or ranking) than the men’s designation, because more men with the designation had been successfully chosen for hire than women. But that result was caused by an intervening event: a human recruiter, or manager, or CHRO, made the hiring decision. And the article points out that at the time it was written, the gender imbalance in technical roles at four of the top technology firms was so pronounced it could not have been coincidence: women held 23% of technical roles at Apple, 22% at Facebook, 21% at Google and 19% at Microsoft. (At the time the article appeared online, Amazon did not publicly disclose the gender breakdown of its technology workers.) The AI program, for whatever reason, was guilty of perpetuating previous gender bias. According to the article, after multiple attempts to correct the problem, Amazon abandoned the AI recruiting effort.
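For readers who like to see the mechanics, here is a minimal sketch in Python of how a scoring program can "learn" this kind of bias. It is not Amazon's method, which the article does not describe in detail; the records, the phrase names and the `learn_phrase_scores` function are all hypothetical, and the scoring rule (smoothed log-odds of a past hire) is simply one common way to turn historical outcomes into points.

```python
import math
from collections import Counter

# Hypothetical hiring history: (phrases found on a resume, was the person hired?).
# The outcomes record past human decisions, not candidate quality.
HISTORY = [
    ({"men's chess club champion", "python"}, True),
    ({"men's chess club champion", "python"}, True),
    ({"men's chess club champion", "python"}, True),
    ({"men's chess club champion", "python"}, False),
    ({"women's chess club champion", "python"}, True),
    ({"women's chess club champion", "python"}, False),
    ({"women's chess club champion", "python"}, False),
    ({"women's chess club champion", "python"}, False),
]


def learn_phrase_scores(history):
    """Assign each phrase 'points' equal to the smoothed log-odds of a past hire."""
    hired, rejected = Counter(), Counter()
    for phrases, was_hired in history:
        for phrase in phrases:
            if was_hired:
                hired[phrase] += 1
            else:
                rejected[phrase] += 1
    scores = {}
    for phrase in set(hired) | set(rejected):
        # Add-one smoothing keeps the ratio defined for phrases seen only once.
        scores[phrase] = math.log((hired[phrase] + 1) / (rejected[phrase] + 1))
    return scores


scores = learn_phrase_scores(HISTORY)
for phrase, points in sorted(scores.items()):
    print(f"{phrase}: {points:+.2f}")
```

Nothing in the code mentions gender as a goal. The women's designation ends up with fewer points than the men's only because the made-up history contains fewer hires next to it, which is exactly the intervening human decision described above.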

Employees Are Different

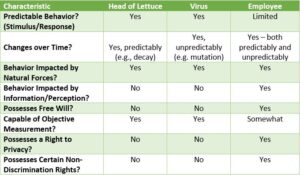

At the risk of stating the obvious, the programming techniques used in AI development differ depending on the objects being analyzed. Let’s take some examples: a head of lettuce (because food safety is a hot topic now), a virus (because, well, that is the HOTTEST of topics right now), and an employee or applicant, that is, a human being. Let’s also look at some of the characteristics of each class of object and how those might advance, or limit, our ability to successfully deploy AI for them.

Fig. 1: AI Development Challenges: Humans Are Different

Whether the objective of a particular AI effort is predictive analytics, substituting machine-made decisions for human ones, or gathering new and valuable characteristics from a group of objects by analyzing big data (in ways and at a speed that efforts unaided by AI could never accomplish), the above truth table begins to shed light on what makes any HR-related AI design effort inherently more difficult than other efforts.

AI Utilization: The Good, The Bad…And The Non-Compliant

There are certainly examples of AI applications in HR that can navigate these “rapids” and still manage to deliver value. These tend to be the queries that are based on well-documented human behavior, have no privacy issues and can still offer helpful insights. Analyzing a few years’ attendance data to predict staffing levels the day before or after an upcoming three-day holiday weekend is a good example.
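A minimal sketch of that attendance example, in Python, might look like the following. The attendance figures and both function names are invented for illustration, and a simple historical average stands in for whatever model a real workforce-management product would use.

```python
import math
from statistics import mean

# Hypothetical history: fraction of scheduled staff who actually showed up on
# the workday before each of the last five three-day holiday weekends.
PAST_PRE_HOLIDAY_ATTENDANCE = [0.82, 0.78, 0.85, 0.80, 0.75]


def expected_attendance_rate(past_rates):
    """Predict the next pre-holiday attendance rate as the historical average."""
    return mean(past_rates)


def people_to_schedule(required_on_site, past_rates):
    """Number of people to schedule so expected attendance meets the requirement."""
    return math.ceil(required_on_site / expected_attendance_rate(past_rates))


rate = expected_attendance_rate(PAST_PRE_HOLIDAY_ATTENDANCE)
print(f"Expected attendance rate: {rate:.0%}")
print("Schedule this many to cover 45 positions:",
      people_to_schedule(45, PAST_PRE_HOLIDAY_ATTENDANCE))
```

With these made-up numbers the historical rate averages about 80%, so covering 45 positions means scheduling 57 people. The prediction works from aggregate behavior only; no individual employee is scored or ranked, which is part of why this kind of query stays out of trouble.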

But things can get murky when more complex stimulus/response mechanisms are introduced. For example, we can predict quite accurately the rate of decay of a head of lettuce based on environmental factors like heat, humidity and sunlight. We can predict, with somewhat less accuracy, the response of a virus to the introduction of a chemical, like an antiviral agent, into its petri dish. But extend that example to employees: how accurately can we predict cognitive retention rates for a one-hour training session we offer? Such predictions would be vital for succession planning and career development, and maybe even for ensuring minimum staffing availability for a line position in a sensitive manufacturing sequence. How accurately can we predict the impact on a tight-knit department or team of introducing a new manager who is a “bad cultural fit,” if we can even objectively define what that means? How accurately can we predict the favorability of a massive change to our benefit program slated for later this year? Such predictions would be vital to avoid declines in engagement and, potentially, retention.

When we turn to compliance, even more roadblocks to AI present themselves. Heads of lettuce have no statutory rights to be free from discrimination, but employees do. Viruses have no regulation-imposed right to privacy, but employees in Europe, California, and various other jurisdictions do. In supply chain management, we can feed into our new AI program a full history of every widget we’ve ever purchased for manufacturing our thing-a-ma-bobs, including cost, burn rate, availability, inbound shipping speed, quality, return rate, customer feedback, repair history and various other factors. As a result, our AI program can spit out its recommendation for the optimal order we should place: how many, where and at what price. If that recommendation turns out to be incorrect or to have overlooked some key or unforeseen factor, no widget can claim its “widget civil rights” were violated, or sue us for violation of the “Widget Non-Discrimination Act.”

But consider the same facts and details as they might be applied to recruiting. Given similar considerations, is it any wonder that a computing powerhouse like Amazon.com was unsuccessful in applying AI to resume screening? Further, is it any wonder that the European Union, in Article 22 of the General Data Protection Regulation, which took effect in May 2018, included a provision prohibiting fully automated decision making, including for job applicants, without human intervention?²

Since so many areas of modern HR management inherently involve judgment calls by experienced, hopefully well-trained managers and directors, how do we take that experiential expertise and transfer it to a computer program? We haven’t found a way to do that yet. The decision might be around hiring, staff reductions, promotions, or salary increases, or even simply the opportunity to participate in a valuable management training program. What Amazon’s experience has taught us is that if a decision confers a benefit on those chosen and a disadvantage, no matter how slight, on those passed over, then any uncontrolled or unmonitored use of AI in making it also carries potential liability for the organization.

Not “No”, But Go Slow?

This is not intended to be the average Counterpoint column, because I am not advocating against the use of AI in all aspects of HR management and its sub-disciplines. It is, however, essential that Human Capital Management professionals, in their decision-making around the design and implementation of artificial intelligence programs, consider the factors that make HR unique: human variability, a compliance framework fraught with limitations and prohibitions, and corporate environmental practices and priorities that are ever changing.